Robots with vision

As a professor of robot vision, Andrew Davison focuses his research on developing the computer vision algorithms that will let robots move beyond the controlled lab and into the real world.

While completing his D.Phil at Oxford University, Professor Davison developed one of the first robots that could map its surroundings using vision as its primary sensor, with two video cameras providing real-time awareness of the world.

But Davison felt that the project's success depended too heavily on that specific robot, so he set out to simplify the visual system until it could work anywhere and on any device.

Since moving to Imperial in 2005, Davison has refined and developed this technology with the goal of finding mass-market applications, from gaming interfaces on mobile phones to driver aids in road vehicles.

Real-time visual navigation by a mobile robot or camera poses two fundamental and tightly coupled challenges: estimating where you are and building a map of your surroundings.

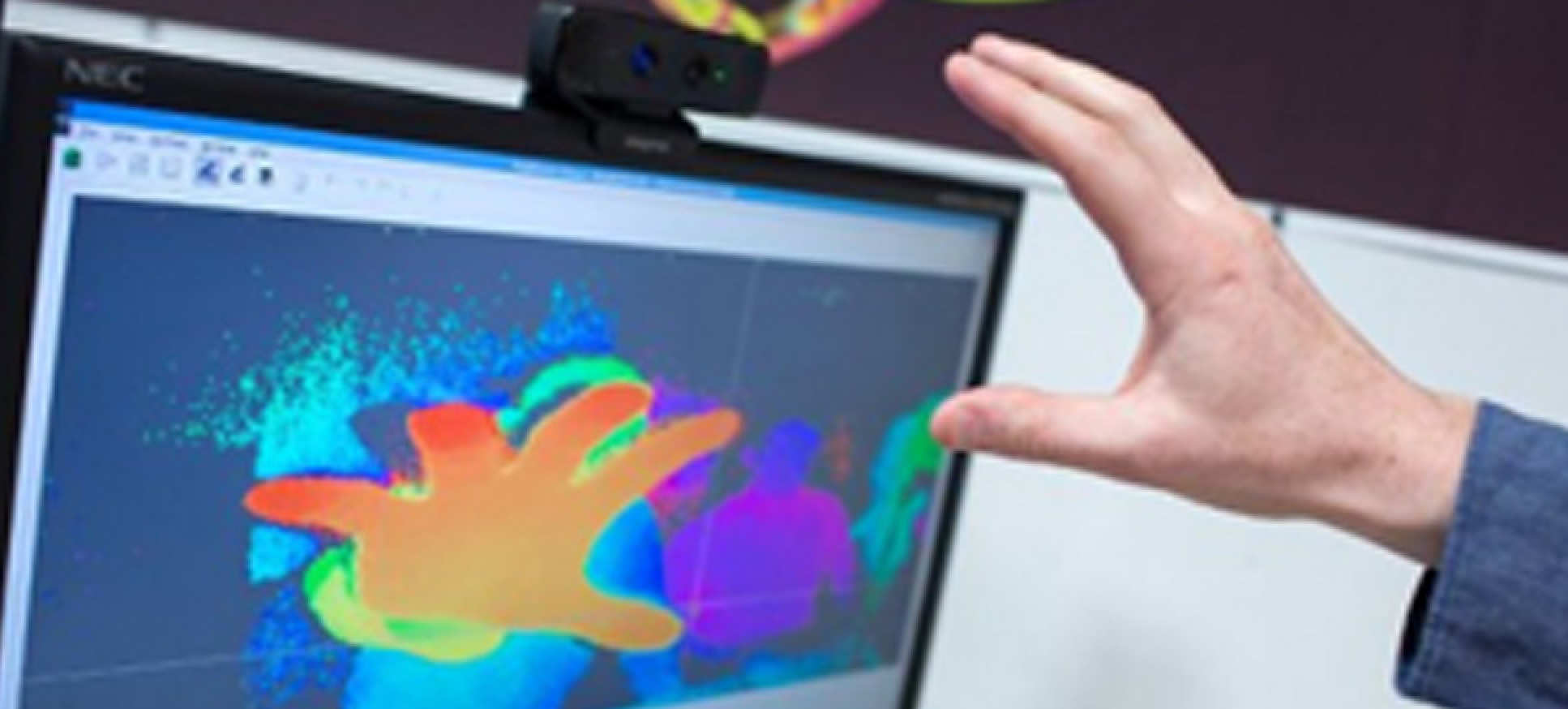

This is known in the robotics field as simultaneous localization and mapping (SLAM). Most previous approaches to SLAM relied on multiple cameras or other expensive sensors, but Davison’s breakthrough simplified the system to achieve SLAM with just a single video camera and real-time processing.

Despite the complexity of deploying SLAM in the real world, Davison hopes to develop an affordable SLAM system that can be built into other devices. The goal is a commodity device with countless uses: film production, wearable sensors, design tools for architects and home help for the elderly.

Two areas where Davison predicts rapid progress are augmented reality for mobile gaming and driver aids in autonomous road vehicles. Davison and colleagues have already shown that images from a rear-parking camera can be used to estimate a vehicle's position and speed in real time.

As the industry adds more and more driver aids to vehicles, better visual sensors and processors will be needed. A simple SLAM system that uses existing vehicle cameras can supplement on-board GPS and improve driver safety.

In mobile gaming, a SLAM system can enable augmented reality, so that a mobile phone gamer can see virtual characters in the video captured by their phone’s camera. Imagine, for example, virtual characters running around your desk or your bedroom.

This sort of SLAM application only becomes attractive when it runs in real time. It is more interesting if it’s interactive, says Davison, who hopes that widespread adoption will become feasible within the next five to eight years, as the required computing power is already affordable for many consumer devices.

Still, Davison hasn't given up on robots. Aside from a few robot vacuum cleaners, real-world robotics is an industry that doesn't exist yet, says Davison. He foresees the day when robots can do genuinely useful tasks, such as sorting your dirty laundry and handling other domestic chores. But that revolution requires much more capable robotic systems, he says.

In his latest research, Davison is pursuing real-time dense modeling of the world, going beyond maps to reconstruct surfaces and objects. This level of understanding, combined with advances in robot manipulation, could lead to new applications for domestic robots. That is the really exciting industry of the future, says Davison.